Image, vision & interaction

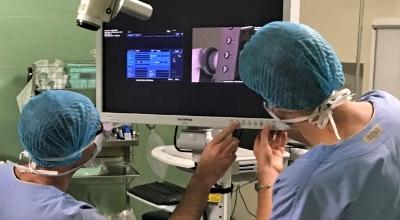

New audiovisual formats make content more immersive. They transform the user's experience, plunging them right into the heart of the action or information. At the same time, advances in augmented reality, virtual reality, and volumetric video technologies are revolutionizing many sectors, offering a new perception of the environment and a tenfold increase in the possibilities for interaction.

From volumetric video to cognitive analysis of people interacting with content, via compression and format conversion, b<>com's expertise covers a broad spectrum of the immersive world.

Mixed and virtual reality, closely linked to digital twin technologies, enable radical innovations in industrial production: predictive maintenance, a smaller energy footprint, fewer manufacturing defects, training...

Awards

- 2019: Product of the Year at NAB Show in Las Vegas

- 2017: Technology Innovation Award at NAB Show in Las Vegas